Foreign media reports pose a scenario: imagine the year is 2022. You are sitting in a self-driving car, following your daily route. You come to a stop sign that you have passed hundreds of times before. This time, however, the car drives straight through it.

To you, this stop sign looks just like any other. To the car, it looks quite different from other stop signs. What neither you nor the car knows is that a few minutes earlier, a street artist put a small sticker on the sign. The human eye does not notice it, but it does not escape the machine's "eye". In other words, this little sticker makes the car "see" the stop sign as a completely different sign.

This may sound far removed from everyday life. However, recent studies have shown that artificial intelligence can easily be fooled by methods like this, and that what it "sees" can deviate hugely from what human eyes see. As machine learning algorithms become more commonplace in transportation, financial, and medical systems, computer scientists hope to find ways to counter these attacks before anyone carries them out in the real world.

"The machine learning and artificial intelligence community is very concerned about this issue, especially as these algorithms are being used more and more widely," says Daniel Lowd, assistant professor of computer and information science at the University of Oregon. "If it is just a spam message slipping through, it is no big deal. But if you are sitting in a self-driving car, you have to be sure the car knows where to go and won't hit anything, so the stakes are naturally much higher."

Whether a smart machine will malfunction or be controlled by hackers depends on the way its machine learning algorithm "understands" the world. If the machine is subjected to interference, it may see a panda as a gibbon or a school bus as an ostrich. An experiment conducted by French and Swiss researchers showed that such interference can cause a computer to see a squirrel as a grey fox or a coffee pot as a parrot.

How is this achieved? Consider how children learn to recognize numbers. As they look at digits, they notice the characteristics that set each one apart, such as "1 is thin and tall, 6 and 9 have a big loop, 8 has two loops", and so on. After seeing enough examples, children can quickly recognize new instances of digits such as 4, 8, and 3, even when the fonts are different.

Machine learning algorithms come to understand the world in a similar way. To make a computer detect something, scientists feed it hundreds of labeled examples. As the machine sifts through this data (e.g., this is a number; this is not a number; this is a number; this is not a number), it gradually picks up the features that characterize the examples. Soon, the machine can accurately conclude that "the number in the picture is 5."
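The learning process described above can be sketched in a few lines of Python. This is only an illustrative toy, not the method used in any of the studies mentioned here: the 3x3 images, the names `ONES`, `ZEROS`, `train`, and `classify`, and the choice of a simple "compare to the average example" rule are all assumptions made for the sake of the example.

```python
# Toy 3x3 images, flattened row by row: 1 = dark pixel, 0 = light pixel.
# All of this data is invented for illustration.
ONES = [  # labeled "this is a 1"
    [0, 1, 0, 0, 1, 0, 0, 1, 0],
    [1, 1, 0, 0, 1, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0, 1, 1, 1],
]
ZEROS = [  # labeled "this is a 0"
    [1, 1, 1, 1, 0, 1, 1, 1, 1],
    [0, 1, 1, 1, 0, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 1, 1, 1, 0],
]


def mean_image(images):
    """Average each pixel position over a list of images."""
    return [sum(column) / len(images) for column in zip(*images)]


def train(positives, negatives):
    """Learn one weight per pixel from the labeled examples."""
    pos, neg = mean_image(positives), mean_image(negatives)
    # A pixel gets a positive weight if it is darker, on average, in the 1s.
    weights = [p - n for p, n in zip(pos, neg)]
    # Place the decision threshold halfway between the two average images.
    midpoint = [(p + n) / 2 for p, n in zip(pos, neg)]
    bias = -sum(w * m for w, m in zip(weights, midpoint))
    return weights, bias


def classify(image, weights, bias):
    """Return 1 if the weighted pixel sum says "1", otherwise 0."""
    score = sum(w * p for w, p in zip(weights, image)) + bias
    return 1 if score > 0 else 0


weights, bias = train(ONES, ZEROS)

# Two images the machine has never seen before.
unseen_one = [0, 1, 0, 0, 1, 0, 0, 1, 1]
unseen_zero = [1, 1, 1, 1, 0, 1, 0, 1, 1]
print(classify(unseen_one, weights, bias))   # prints 1
print(classify(unseen_zero, weights, bias))  # prints 0
```

Note that the machine never learns a concept such as "thin and tall". It only ends up with one number per pixel position, which is the point the article turns to next.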

From numbers to cats, from ships to faces, children and computers both use this method to learn to identify objects. But unlike human children, computers do not pay much attention to high-level details, such as a cat's furry ears or the distinctive triangular shape of the number 4. What the machine "sees" is not the picture as a whole but the individual pixels in it. Take the number 1 as an example. If most images of the digit 1 have black pixels in one location and a few white pixels in another, the machine makes its decision simply by checking those pixels. Now back to the stop sign. If some pixels of the sign are changed in a way that is not easily noticeable to the naked eye, which is what experts call "interference", the machine will see the stop sign as something else.
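A minimal sketch of why tiny pixel changes can flip a decision, assuming a purely hypothetical linear "stop sign detector" whose weights are hand-picked rather than trained. The names `weights`, `score`, `label`, and `perturb`, the 20x20 image size, and the step size of 0.06 are all invented for illustration. Real attacks target far more complex models, but the underlying idea of nudging every pixel slightly in the direction that hurts the score is similar.

```python
# Hypothetical linear classifier over a 20x20 grayscale image
# (400 pixels, brightness from 0.0 to 1.0). Weights are invented.
N = 400
weights = [0.05 if i % 2 == 0 else -0.05 for i in range(N)]


def score(image):
    """Weighted sum of pixel values; positive means "stop sign"."""
    return sum(w * p for w, p in zip(weights, image))


def label(image):
    return "stop sign" if score(image) > 0 else "something else"


# Clean image: the first 40 pixels carry the pattern the classifier
# looks for, and the remaining pixels are a mid-gray background.
clean = [(1.0 if i % 2 == 0 else 0.0) if i < 40 else 0.5 for i in range(N)]


def perturb(image, eps):
    """Move each pixel by at most eps in the direction that lowers the score."""
    result = []
    for w, p in zip(weights, image):
        step = -eps if w > 0 else eps
        result.append(min(1.0, max(0.0, p + step)))  # stay in valid range
    return result


adversarial = perturb(clean, 0.06)

print(label(clean))        # prints "stop sign"
print(label(adversarial))  # prints "something else"
```

No single pixel changes by more than 0.06 on a 0-to-1 brightness scale, yet the 400 small changes add up: the score moves from about 1.0 to about -0.2, enough to cross the decision threshold.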

Researchers at the University of Wyoming and Cornell's Evolutionary Artificial Intelligence Laboratory have done similar work, creating a series of optical illusions for artificial intelligence. The abstract patterns and colors in these pictures are meaningless to the human eye, yet computers quickly identify them as snakes or rifles. This shows that what artificial intelligence "sees" in an object may be very different from the actual situation.

This defect exists in all kinds of machine learning algorithms. "Each algorithm has its own loopholes," said Yevgeniy Vorobeychik, assistant professor of computer science and computer engineering at Vanderbilt University in the United States. "We live in an extremely complex, multidimensional world, and algorithms can only focus on a small part of it." Vorobeychik is "convinced" that if these loopholes exist, sooner or later someone will work out how to exploit them. Someone may already have done so.

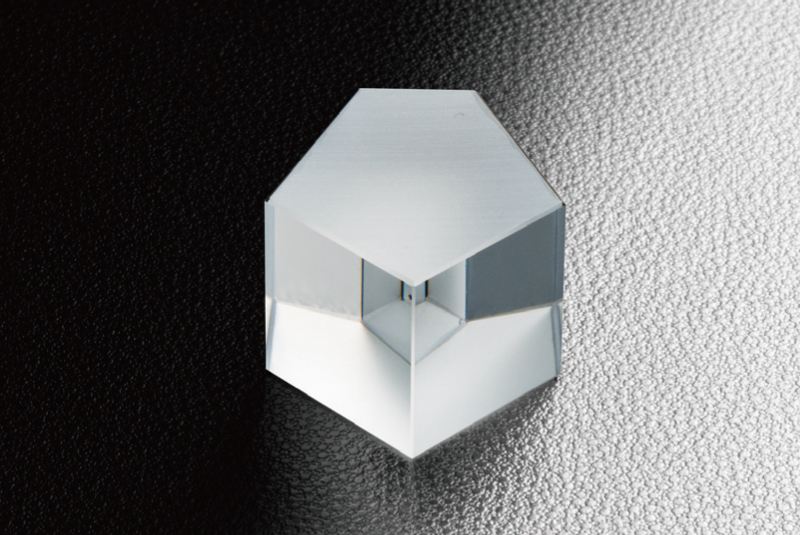
